Everyone’s talking digital transformation in today’s volatile, uncertain, complex and ambiguous business landscape. We all want our organisations to stay relevant, reinvent themselves and avoid going the way of a Thomas Cook or a Kodak. To support the transformational change that’s required, enterprises have been talking app modernisation for a while, and moving business processes to the Cloud, sometimes “as is” and sometimes by redeveloping them from scratch. Today, both in terms of cost and agility, using Cloud technology for new developments is a given, but for most organisations there is no one right Cloud. We live in a Hybrid Cloud World whether we like it or not. According to the Rightscale State of the Cloud survey, depending on the size of your organisation, from medium to large, you might be dealing with 5 different Clouds, along with the business critical systems you are, most likely, still running in your data centre. Even a born in the Cloud start up usually has more than just one Cloud/SaaS platform to drive its business.

There is no single Cloud platform that has all the answers, and the three major Public Cloud providers are adding features and functions to their platforms continuously. How do we manage that Multicloud challenge? There is no one answer to that either, but a few days ago I heard HPE’s new angle on looking at the problem from the data layer, which ought to be the starting point for thinking about business solutions in any case.

The ingredients of their solution, in my mind, involve a combination of data abstraction and 3 Cs – Cloud, Containers and Choice. Let me explain their product and what I mean in a little more detail.

HPE Cloud Volumes

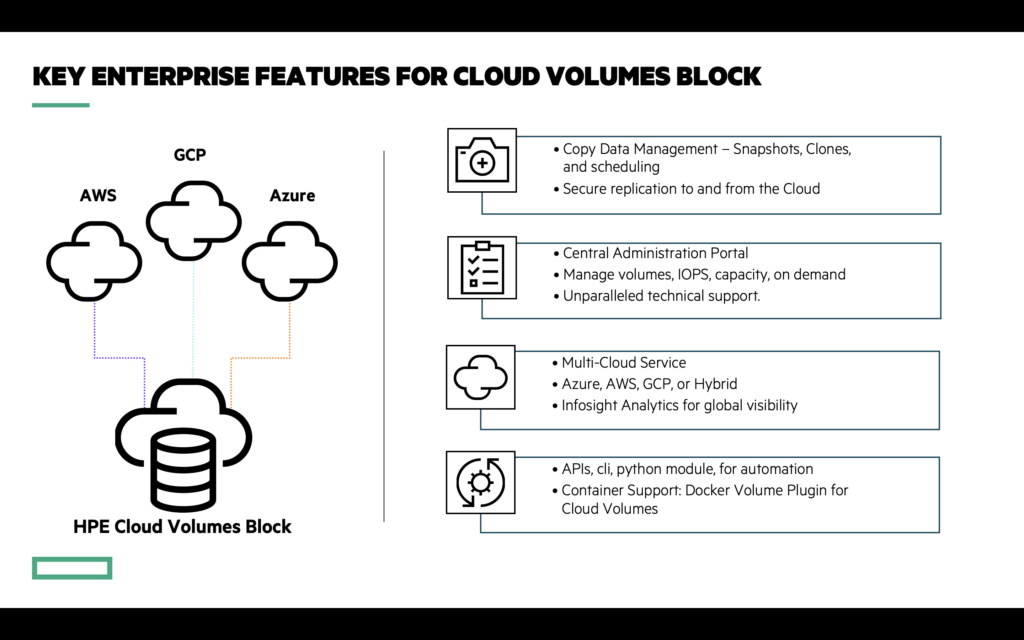

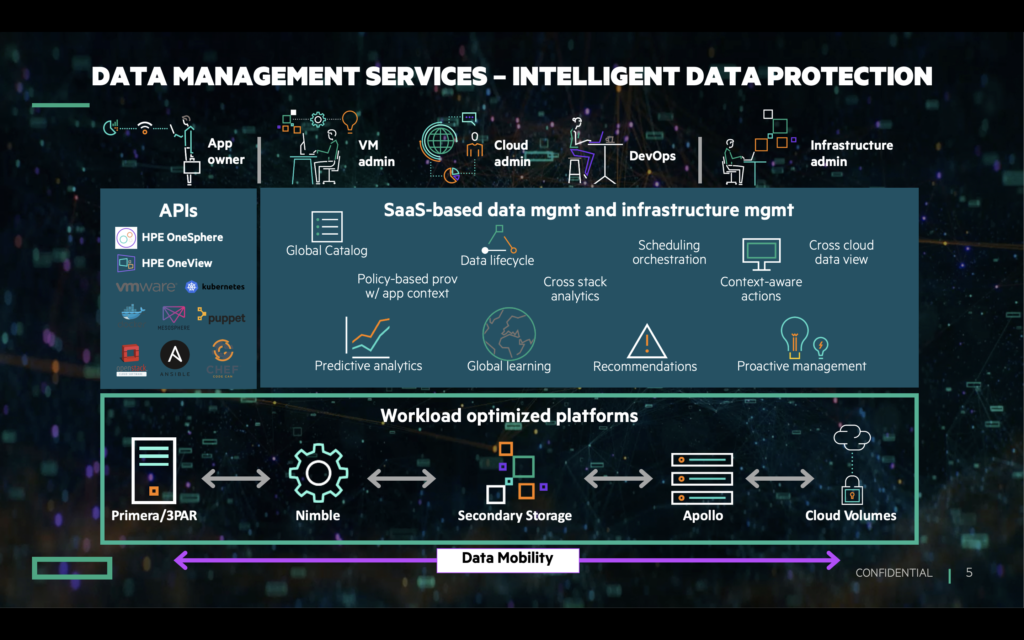

HPE explained their new Cloud Volumes series of data and management services at a workshop run by Nick Dyer, their Field CTO for Nimble and Intelligent Storage, and Tony Stranack, their EMEA Head of Information and Data Strategies. The problems they are trying to address are common across the Multicloud enterprise. They want to allow portability between the various Public Cloud options and/or on-premises hardware so customers can choose the right tool for the job, both now and over time as platforms, circumstances and costs change. They want to provide those services with enterprise grade resilience and availability. They want to make the data repository itself easy to manage, in a unified way across the options. Above all they want to give customers choice and flexibility, whether you are working on existing mission critical apps, or developing new apps with an agile and DevOps mode of development and deployment.

Nick asked the question “where is the right place for my data?” and then went on to explain that data always has “gravity”. By that he means that data is bound by the constraints of where and how it was created, and how it is being stored. Depending on that context, there are various factors “pulling” at that data if and when you want to move it and use it.

Ingress and Egress

The biggest pull is Ingress and Egress, now a normal part of our cloud terminology, but why don’t we just say in and out? Putting my quibble about words aside, we are talking about the costs of getting your data into and out of the major Cloud providers’ platforms. For Microsoft Azure, Amazon Web Services and Google Cloud Platform, moving your data into their platform doesn’t cost a thing. Of course, they charge you for the storage you use, and they hope you stay a long time, but then they charge you when you want to move that data out of their platform, back on premises or to some other destination. The costs can be significant.
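To get a feel for how egress charges add up, here is a rough sketch in Python. It assumes a simple flat charge per gigabyte moved out; real provider pricing is tiered and changes over time, so the rate below is a purely hypothetical placeholder and the function name is my own, not anything from a provider’s price list.

```python
def egress_cost(data_gb: float, rate_per_gb: float, moves: int = 1) -> float:
    """Total charge for moving `data_gb` out of a cloud `moves` times,
    assuming a flat per-gigabyte rate (a simplification)."""
    if data_gb < 0 or rate_per_gb < 0 or moves < 0:
        raise ValueError("inputs must be non-negative")
    return data_gb * rate_per_gb * moves


# Hypothetical placeholder rate, NOT a real provider price.
HYPOTHETICAL_RATE_PER_GB = 0.05

for terabytes in (1, 50, 500):
    cost = egress_cost(terabytes * 1000, HYPOTHETICAL_RATE_PER_GB)
    print(f"{terabytes} TB moved out once: {cost:,.2f} currency units")
```

The point is simply that the charge scales with both the volume of data and the number of times you move it, so plug in your own provider’s current rates before drawing conclusions.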

Data Abstraction

With the Cloud Volumes service your data is held in a single repository that is logically connected to your on-premises compute, or to any of the 3 Public Cloud services. This brings significant benefits in both time and cost. Because the data isn’t being physically moved, there are no egress charges and no waiting for the data to move. This gives you all the flexibility and portability between platforms that you need, with the advantage that HPE only bills you for exactly the amount of storage and management services you consume.

Enterprise Grade Availability

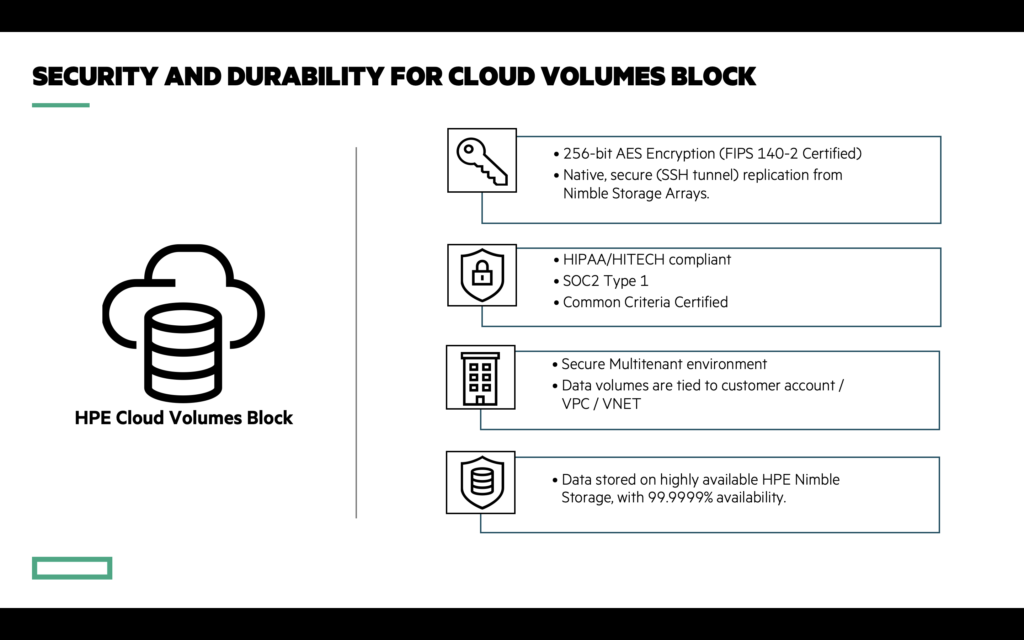

You need enterprise grade security, resilience and availability. The service uses HPE’s Nimble storage, designed for low latency with 256-bit AES encryption and 99.9999% availability.
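To put “six nines” in perspective, here is a quick back-of-the-envelope sketch in Python. It just converts an availability percentage into the yearly downtime it implies; the function name and the choice of a 365-day year are my own assumptions for illustration, not anything from HPE.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a non-leap year


def allowed_downtime_seconds(availability_percent: float) -> float:
    """Maximum yearly downtime implied by an availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = 1 - availability_percent / 100
    return SECONDS_PER_YEAR * unavailable_fraction


for label, pct in [("three nines", 99.9), ("six nines", 99.9999)]:
    seconds = allowed_downtime_seconds(pct)
    print(f"{label} ({pct}%): {seconds:.1f} seconds of downtime per year")
```

For 99.9999% that works out to roughly 31.5 seconds a year, compared with nearly nine hours for three nines.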

Potential Solutions

The key benefits the approach drives are choice and flexibility. Cloud Volumes allows you to move workloads and data from on-premises to any cloud (and back) simply and efficiently, helping you avoid being locked in to the first Public Cloud you chose. It allows you to develop natively in Cloud and deploy on-premises or vice versa. You could run production on-premises but apply AI and analytics logic in the Cloud, adding the ability to scale capacity up and down as necessary. The service allows you to run multiple instances across several Clouds and on-premises simultaneously. You could run production on-premises but recover in the Cloud. It allows you to spin up a new instance in seconds to try something out.

Data Management

Cloud Volumes gives you a choice in how you manage the data service too, as well as providing a consistent approach across Cloud and on-premises. You can use their portal, a Software as a Service based data management approach, as well as the command line or cloud first APIs. The service embraces Docker and Kubernetes to support the kind of Continuous Integration, Continuous Delivery approach that allows you to release more, faster and better: to develop once and deploy anywhere.

Underpinning the service is HPE’s InfoSight. This is an AI based tool that analyses and correlates data from millions of sensors across all of their globally deployed systems. It constantly watches over your particular environment, but it has also learned from managing the entire HPE customer hardware estate to predict problems. If it uncovers an issue, it resolves the issue and prevents other systems from experiencing the same problem. It continuously learns so it gets better and more reliable over time. It takes the guesswork out of managing infrastructure and simplifies planning by accurately predicting capacity, performance, and bandwidth needs. Pretty smart.

Conclusion

Cloud Volumes provides a new angle on the Multicloud management problem that every enterprise faces. By separating out the data, it addresses a key cost and time issue, because you are moving your data between platforms logically, not physically. It simplifies the options for developing new cloud first apps, dealing with mission critical systems, disaster recovery, fail over and more. It’s a set of tools that helps you choose the right Cloud, use a modern containerised approach, and change your Cloud or on-premises choice as the cost equation or other factors change. From what I saw at the workshop it’s well worth exploring, and we hear there will be more announcements around the service coming very soon.

Check back here once we’ve had that briefing, or contact me if you want more detailed advice now.

Views from my colleagues who also attended the Cloud Volumes workshop:

- Richard Arnold’s take

- Bill Mew interviewed Nick Dyer

- Ian Moyse’s thoughts TBA

Hewlett Packard Enterprise is a customer and includes me in their global influencer programme.